
Web Scraping API Quick Start

Our Web Scraping API is a comprehensive data collection solution for eCommerce marketplaces, search engine results pages, social media platforms, and various other websites.

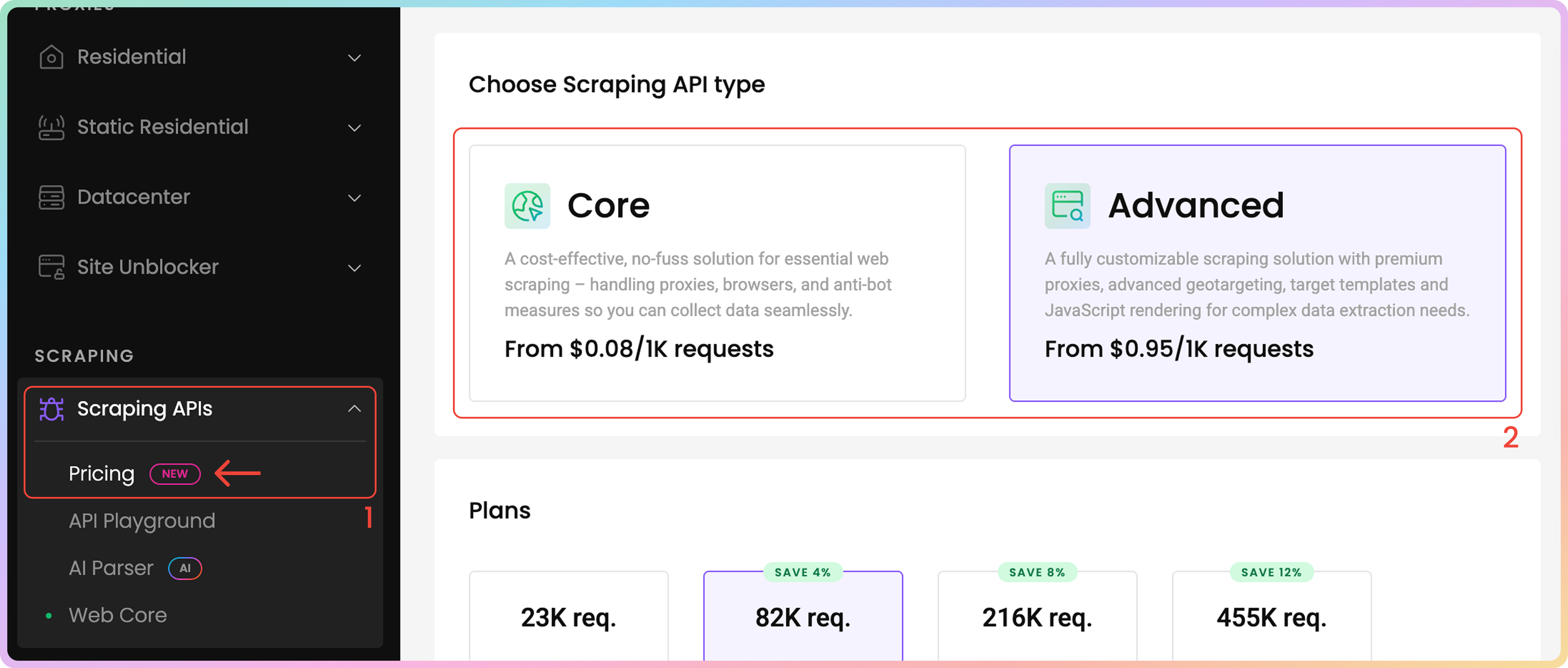

To start using the Web Scraping API, go to the Decodo Dashboard and select Scraping APIs and Pricing, where you can choose between Advanced and Core plans.

- Core: cost-effective scraping for structured web data with minimal configuration.

- Advanced: fully customizable scraping with JavaScript rendering, pre-built templates, and structured data output.

- Find a more detailed comparison here.

Scraping API Plan Selection.

Integrations

Web Scraping API supports two types of integrations:

- **Realtime** for synchronous requests.

- **Asynchronous**, which also supports batch requests and is best for handling large amounts of data.

Realtime

The Web Scraping API solution uses the following POST endpoint for real-time (synchronous) requests:

https://scraper-api.decodo.com/v2/scrape

Here are some examples:

```shell
curl -u username:password 'https://scraper-api.decodo.com/v2/scrape' \
  -H "Content-Type: application/json" \
  -d '{"target": "google_search", "domain": "com", "query": "world"}'
```

```php
<?php

$username = "username";
$password = "password";

// Task parameters, sent as a JSON request body
$search = [
    'target' => 'google_search',
    'domain' => 'com',
    'query' => 'world',
    'parse' => true
];

$headers = ['Content-Type: application/json'];

$ch = curl_init();

$options = [
    CURLOPT_URL => 'https://scraper-api.decodo.com/v2/scrape',
    CURLOPT_USERPWD => sprintf('%s:%s', $username, $password),
    CURLOPT_POSTFIELDS => json_encode($search), // setting a body makes cURL send a POST
    CURLOPT_RETURNTRANSFER => true,             // return the response instead of printing it
    CURLOPT_ENCODING => 'gzip, deflate',
    CURLOPT_HTTPHEADER => $headers
];

curl_setopt_array($ch, $options);

$result = curl_exec($ch);

if (curl_errno($ch)) {
    echo 'Error: ' . curl_error($ch);
}

curl_close($ch);

$result = json_decode($result);

var_dump($result);

?>
```

```python
import requests

headers = {
    'Content-Type': 'application/json'
}

task_params = {
    'target': 'google_search',
    'domain': 'com',
    'query': 'world',
    'parse': True
}

username = 'username'
password = 'password'

# Synchronous call: blocks until the API returns the scraped result
response = requests.post(
    'https://scraper-api.decodo.com/v2/scrape',
    headers=headers,
    json=task_params,
    auth=(username, password)
)

print(response.text)
```

This integration method lets you pass all of your desired parameters in the body of a single synchronous request. It requires keeping the connection open until a successful or unsuccessful response is returned. Learn more here.
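If you'd rather avoid the third-party requests package, the same realtime call can be sketched with Python's standard library alone. This is a minimal sketch, not an official client: it builds the Basic Authorization header by hand from username:password (the standard HTTP Basic scheme), and the helper names build_scrape_request and scrape, as well as the 180-second timeout, are hypothetical choices. A generous timeout is used because the realtime integration keeps the connection open until the result is ready.

```python
import base64
import json
import urllib.request

SCRAPE_URL = "https://scraper-api.decodo.com/v2/scrape"


def build_scrape_request(username: str, password: str, params: dict) -> urllib.request.Request:
    """Build (but do not send) a synchronous POST request to the realtime endpoint."""
    # Standard HTTP Basic credentials: Base64 of "username:password"
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        SCRAPE_URL,
        data=json.dumps(params).encode(),  # task parameters travel in the JSON body
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


def scrape(username: str, password: str, params: dict, timeout: float = 180.0) -> dict:
    """Send the request and block until the API responds (hypothetical timeout value)."""
    req = build_scrape_request(username, password, params)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode())
```

For example, `scrape("username", "password", {"target": "google_search", "domain": "com", "query": "world", "parse": True})` sends the same task as the examples above and returns the decoded JSON response; urlopen raises an HTTPError for non-2xx replies.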

Asynchronous

The Web Scraping API solution uses the following POST endpoint for asynchronous requests:

https://scraper-api.decodo.com/v2/task

Here's an example using the `callback_url` parameter. This parameter is optional; use it to receive a callback when the request is completed:

```shell
curl -u username:password -X POST --url https://scraper-api.decodo.com/v2/task -H "Content-Type: application/json" -d "{\"url\": \"https://ip.decodo.com\", \"target\": \"universal\", \"callback_url\": \"https://your.url\"}"
```

```python
import requests

payload = {
    'target': 'universal',
    'url': 'https://ip.decodo.com',
    'callback_url': 'https://your.url'  # optional: where the completion callback is sent
}

response = requests.post(
    'https://scraper-api.decodo.com/v2/task',
    auth=('username', 'password'),
    json=payload  # send a JSON body, matching the cURL and PHP examples
)

print(response.text)
```

```php
<?php

$params = array(
    'url' => 'https://ip.decodo.com',
    'target' => 'universal',
    'callback_url' => 'https://your.url'
);

$ch = curl_init();

curl_setopt($ch, CURLOPT_URL, 'https://scraper-api.decodo.com/v2/task');
curl_setopt($ch, CURLOPT_USERPWD, 'username' . ':' . 'password');
curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);
curl_setopt($ch, CURLOPT_POSTFIELDS, json_encode($params));
curl_setopt($ch, CURLOPT_POST, 1);

$headers = array();
$headers[] = 'Content-Type: application/json';
curl_setopt($ch, CURLOPT_HTTPHEADER, $headers);

$result = curl_exec($ch);

if (curl_errno($ch)) {
    echo 'Error:' . curl_error($ch);
} else {
    echo $result;
}

curl_close($ch);

?>
```

Queue multiple tasks with this Batch Request (Asynchronous) POST endpoint:

https://scraper-api.decodo.com/v2/task/batch

This method allows you to queue up multiple requests asynchronously and either retrieve the results later or receive them at your callback URL. Learn more here.
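To receive callbacks, `callback_url` has to point at a publicly reachable HTTP server you control. The sketch below uses Python's built-in http.server and assumes the callback arrives as a POST whose JSON body contains the task id under an "id" key; that payload shape, the port, and the names extract_task_id, CallbackHandler, and serve are assumptions for illustration, so inspect a real callback body before relying on them.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def extract_task_id(body: bytes) -> str:
    """Pull the task id out of a callback body (assumes a JSON object with an "id" key)."""
    data = json.loads(body)
    return str(data["id"])


class CallbackHandler(BaseHTTPRequestHandler):
    """Minimal receiver for completion callbacks (hypothetical payload shape)."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        try:
            task_id = extract_task_id(body)
        except (ValueError, KeyError, TypeError):
            # Not JSON, or no "id" field: reject the request
            self.send_response(400)
            self.end_headers()
            return
        print(f"Task {task_id} completed")
        # Acknowledge receipt with a 200 response
        self.send_response(200)
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Listen for callbacks until interrupted."""
    HTTPServer(("", port), CallbackHandler).serve_forever()
```

The extracted id is what you would then plug into the results endpoint.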

To retrieve the results of your task, use the task id from the response or callback with this endpoint:

https://scraper-api.decodo.com/v2/task/{task_id}/results
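A minimal sketch of filling in and calling the results endpoint with Python's standard library. It assumes the results are fetched with a GET request, authenticated with HTTP Basic like the other endpoints, and returned as JSON; results_url and fetch_results are hypothetical helper names. It makes a single call and does not poll, since this page doesn't say what the endpoint returns for a task that is still running.

```python
import base64
import json
import urllib.parse
import urllib.request

RESULTS_TEMPLATE = "https://scraper-api.decodo.com/v2/task/{task_id}/results"


def results_url(task_id) -> str:
    """Insert the task id (from the task response or callback) into the results endpoint."""
    # Quote the id so unexpected characters can't alter the URL path
    return RESULTS_TEMPLATE.format(task_id=urllib.parse.quote(str(task_id), safe=""))


def fetch_results(task_id, username: str, password: str) -> dict:
    """Fetch a task's results once (assumes GET + HTTP Basic auth and a JSON response)."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    req = urllib.request.Request(
        results_url(task_id),
        headers={"Authorization": f"Basic {token}"},
        method="GET",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode())
```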

Authentication

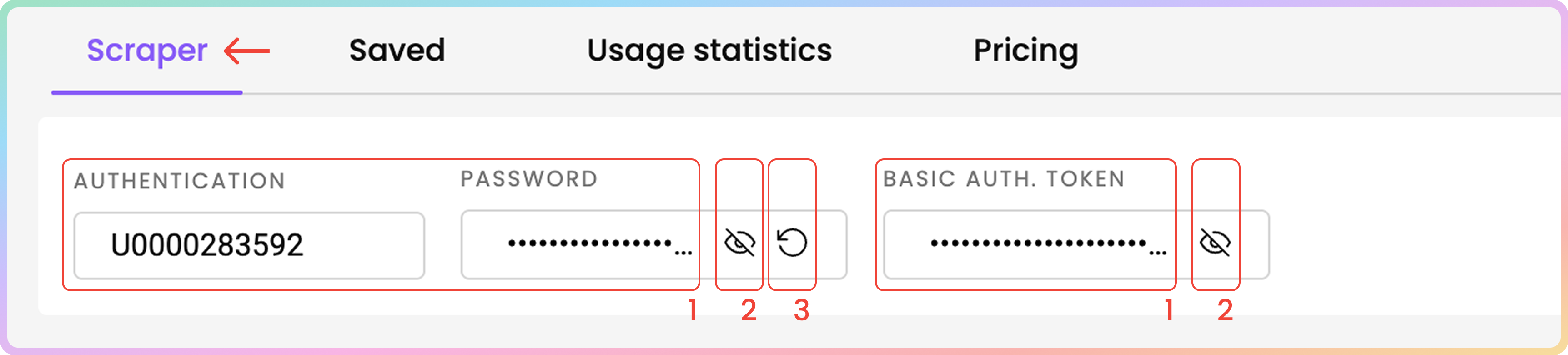

Once you have an active Web Core or Web Advanced subscription, you can check your generated proxy username and Basic Authentication Token, as well as view or regenerate your password, in the Scraper tab.

You can copy your username or password by clicking it.

To see the password or token, click the eye icon.

You can generate a new password and token by clicking the arrow icon and choosing Confirm.

- The Basic Authentication Token is a combination of the username and password in Base64 encoding (Base64 encoding is not encryption; it's easily decoded).

Scraping API Authentication Management.
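Because the token is plain Base64, you can reproduce or inspect it locally. The sketch below assumes the dashboard token follows the standard HTTP Basic scheme (RFC 7617), that is, Base64 of username:password; if the dashboard value differs from what basic_auth_token returns, trust the dashboard. The function names are hypothetical.

```python
import base64
from typing import Dict, Tuple


def basic_auth_token(username: str, password: str) -> str:
    """Base64-encode "username:password", as in the standard HTTP Basic scheme."""
    return base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")


def decode_basic_auth_token(token: str) -> Tuple[str, str]:
    """Reverse the encoding, showing why the token is as sensitive as the password."""
    # Split on the first colon only; the password itself may contain colons
    username, _, password = base64.b64decode(token).decode("utf-8").partition(":")
    return username, password


def authorization_header(token: str) -> Dict[str, str]:
    """Header you can send instead of passing the username and password separately."""
    return {"Authorization": f"Basic {token}"}
```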

Dashboard Core Scraper

Here, you can try sending a request to your chosen target right from your dashboard:

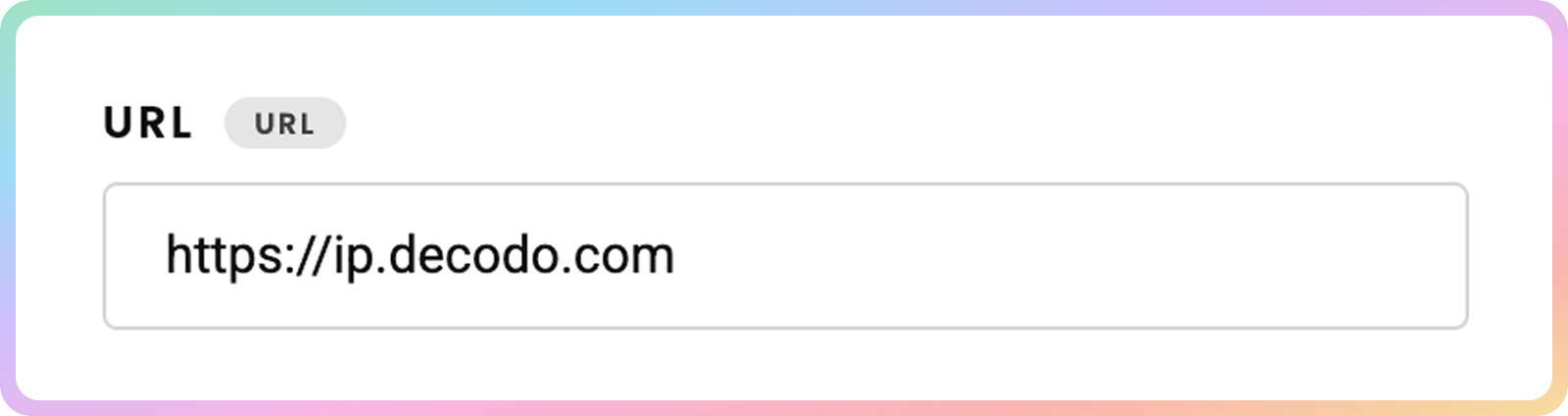

URL

- First, enter the URL of the site you wish to target.

Location

- Choose the location from which you'd like to access the website.

- Depending on your desired location, the Web Scraping API will add proxies from the Decodo IP pool.

- The Web Scraping Core solution supports 8 countries.

- Learn more here.

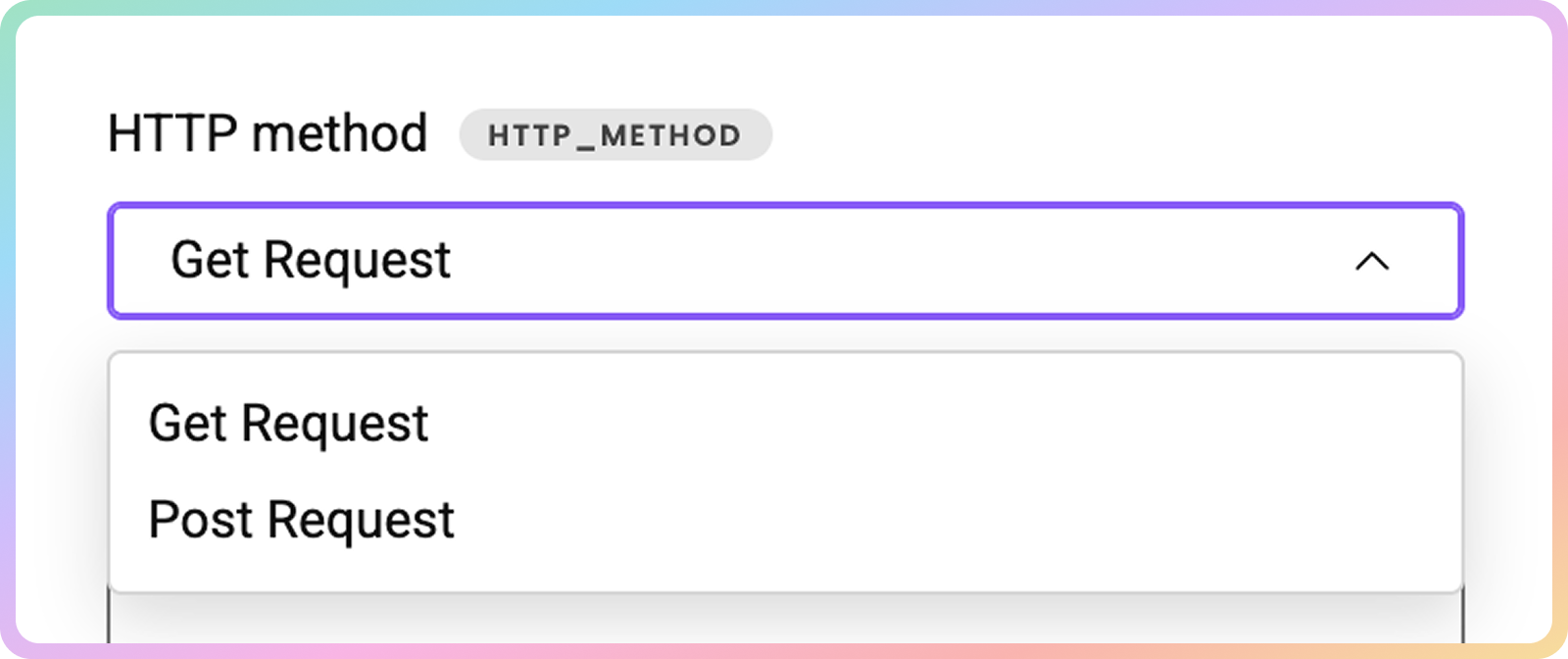

HTTP Method

- Then, you can choose the HTTP method. GET is the default; however, you can select POST if you want to pass a payload with your request.

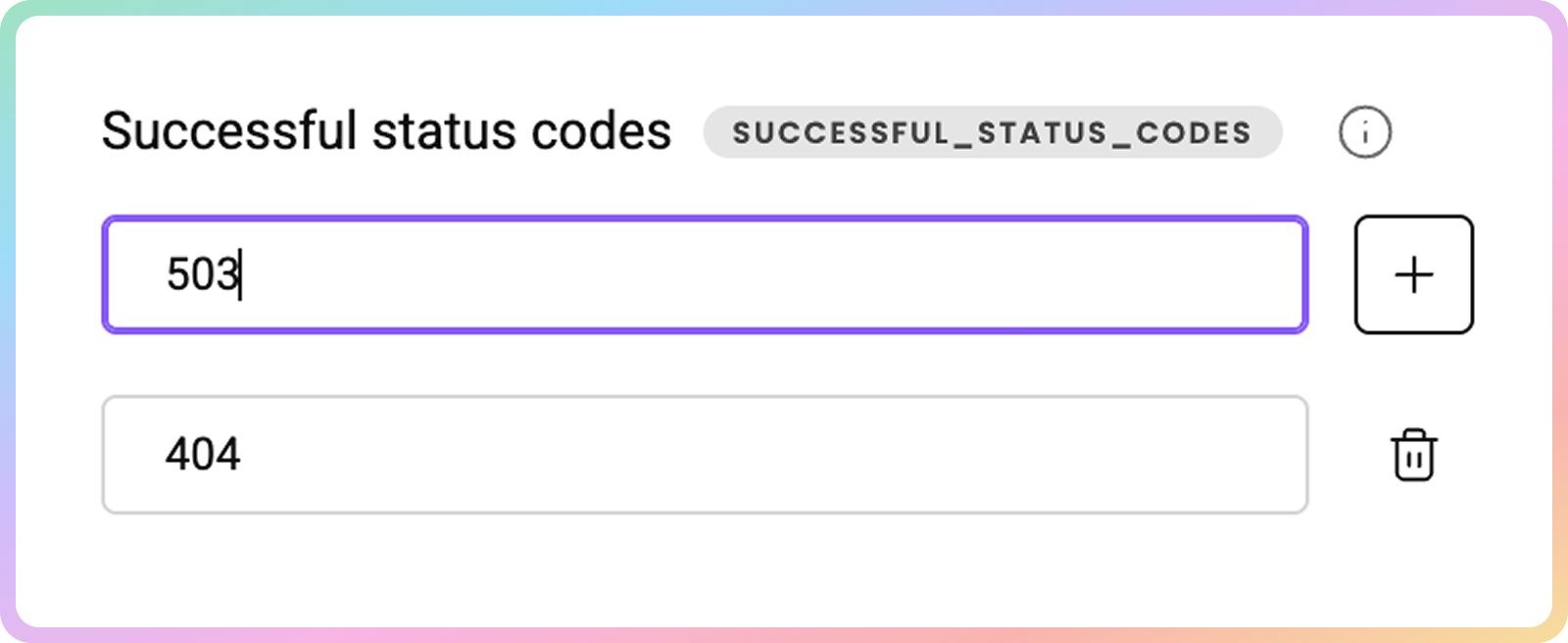

Successful Status Codes

- Finally, define one or more HTTP response codes that you consider successful for retrieving the content.

- Sometimes, websites return the required content together with an HTTP status code that normally indicates an error.

- If one of your targets does that, you can indicate which status codes are acceptable and valuable to you: 501, 502, 403, etc.

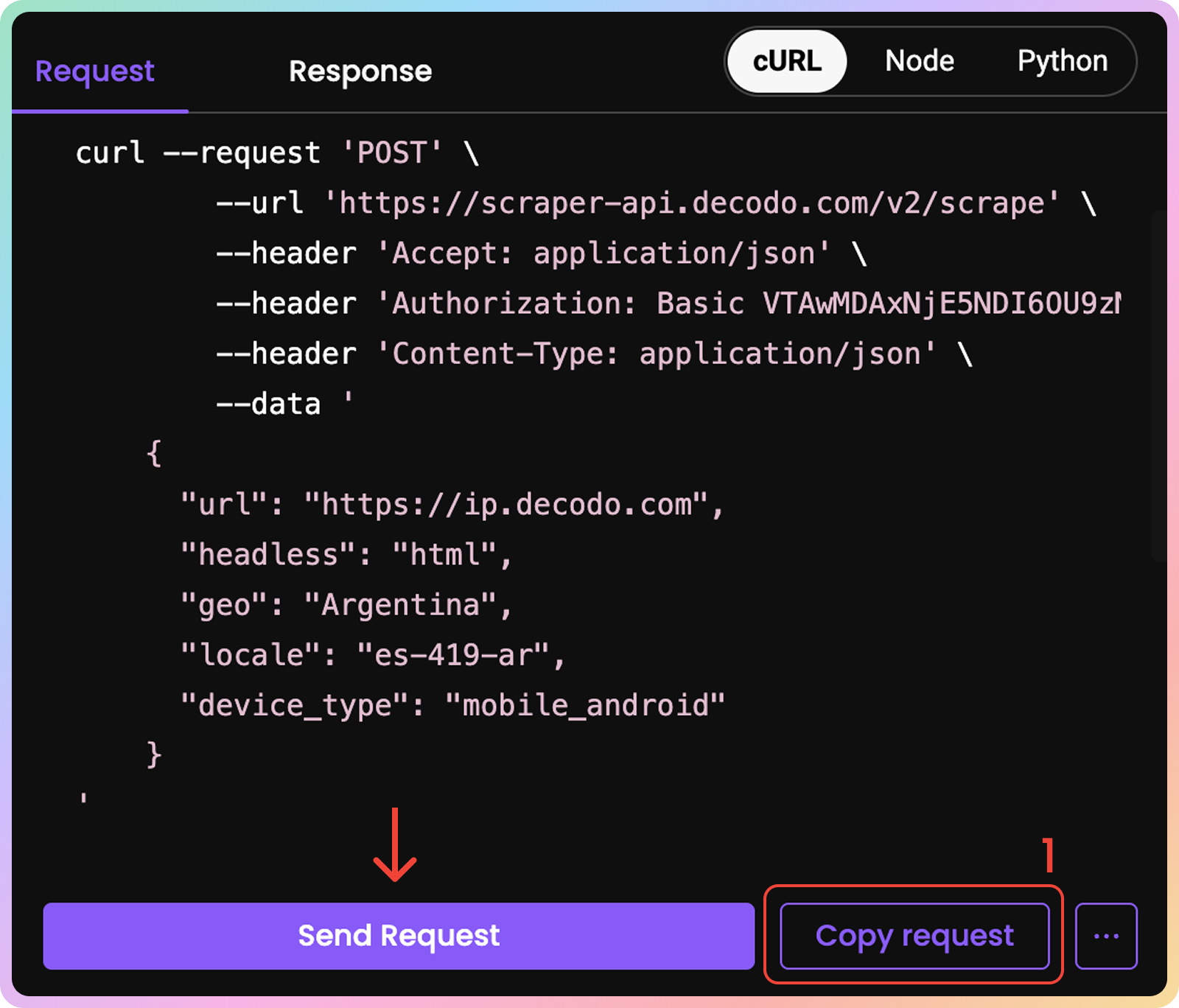

Sending a Request

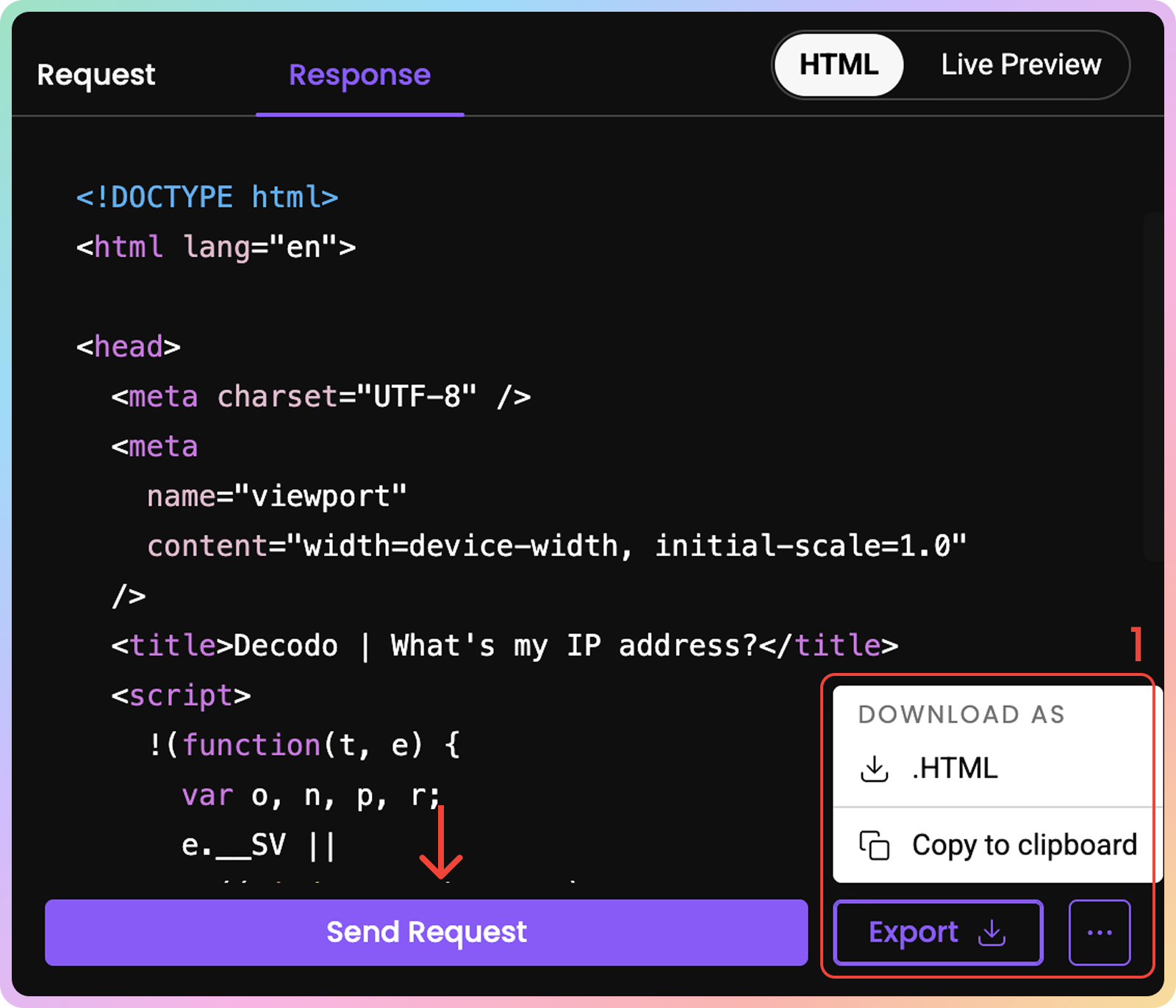

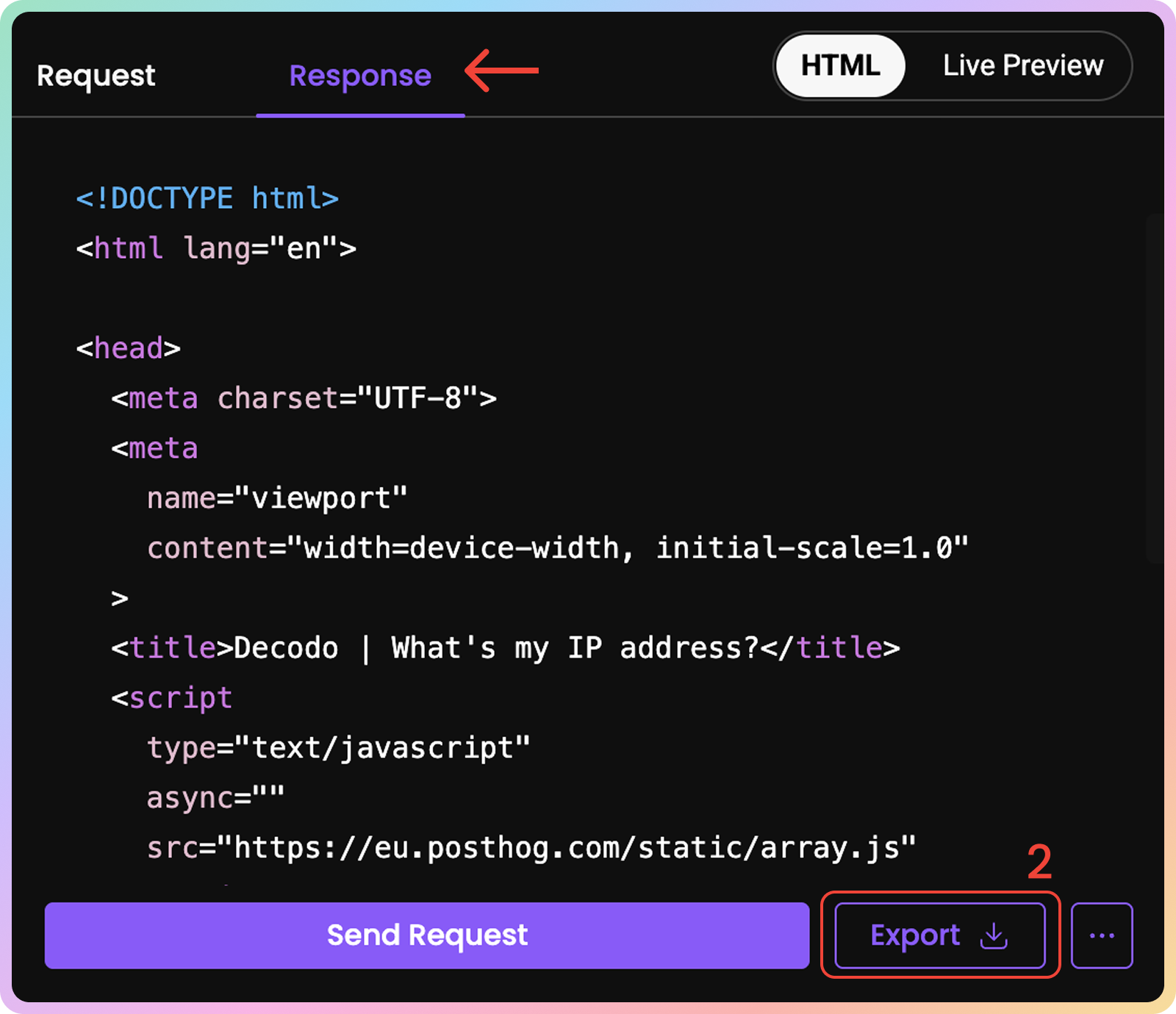

- Once you're all set, press Send Request. The raw

HTMLrequest will appear on the right.

- You can copy the response or download it in

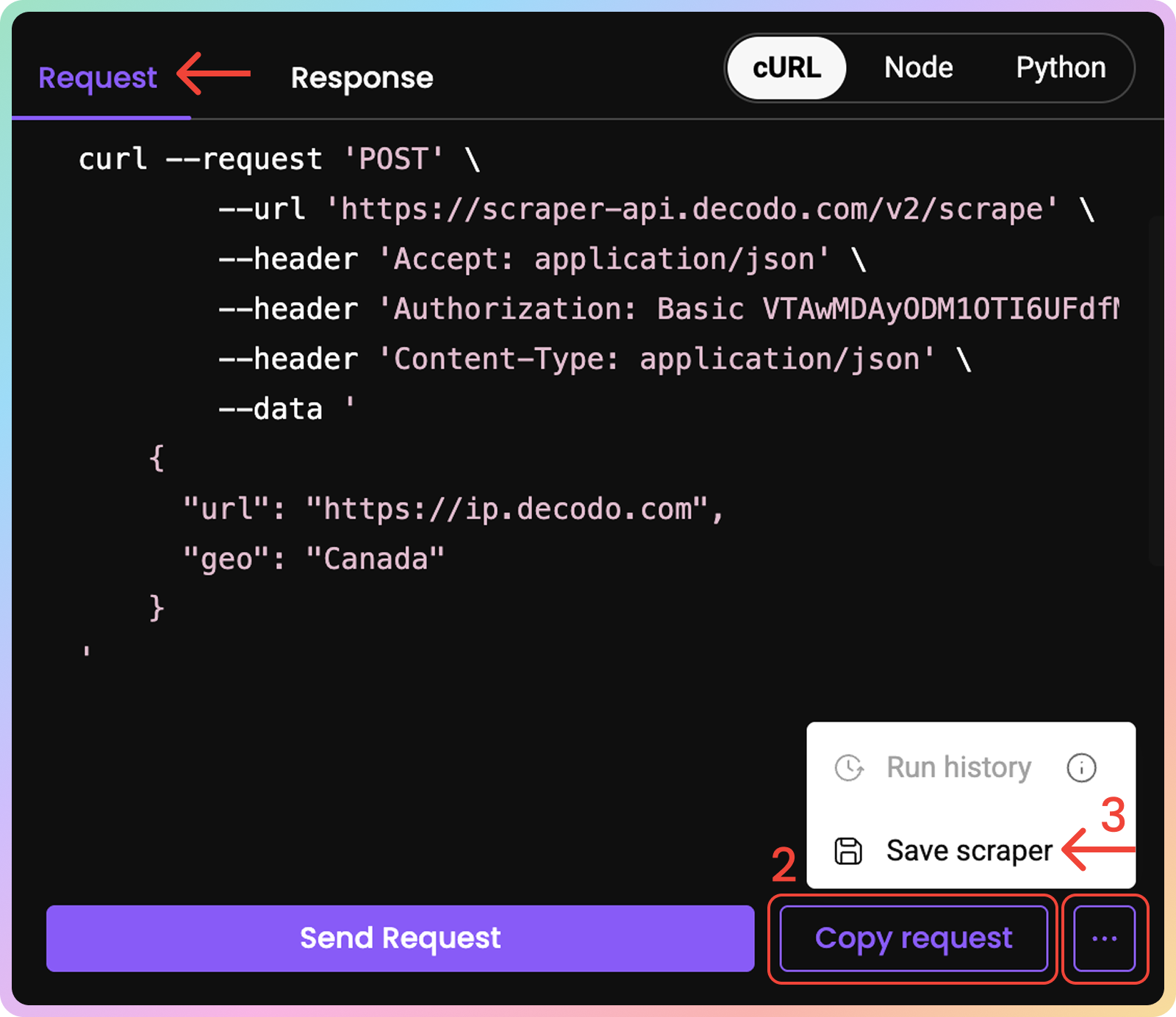

HTML.- If you select the Request tab, you may also copy requests in

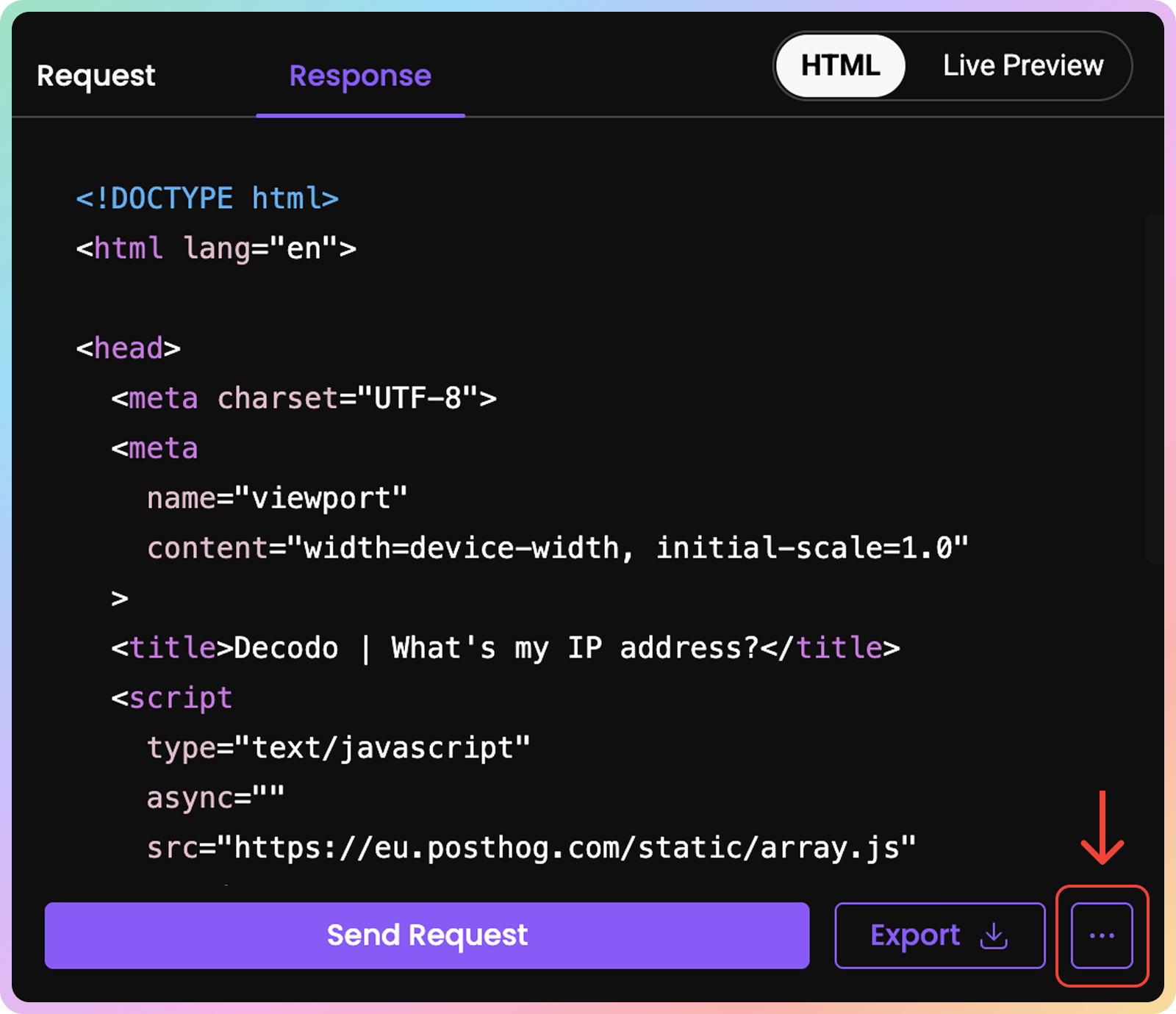

cURL,NodeorPython, by clicking Copy request.- If you need to reuse the scraper, save it by clicking the three dots button and save scraper. Then simply go back to the Saved section and access your saved template.

Dashboard Advanced Scraper

Here, you can try sending a request to your chosen target right from your dashboard:

Choose a Target

- First, you may choose a scraping template. If you don't see the target you want to scrape, select Web.

- Target templates apply specialized unblocking strategies, target-specific parameters, and dedicated parsing techniques.

- Check out all of our templates here.

URL

- Now, enter the URL of the site you wish to target.

Bulk

- By enabling the Bulk feature, you can target multiple sites at once.

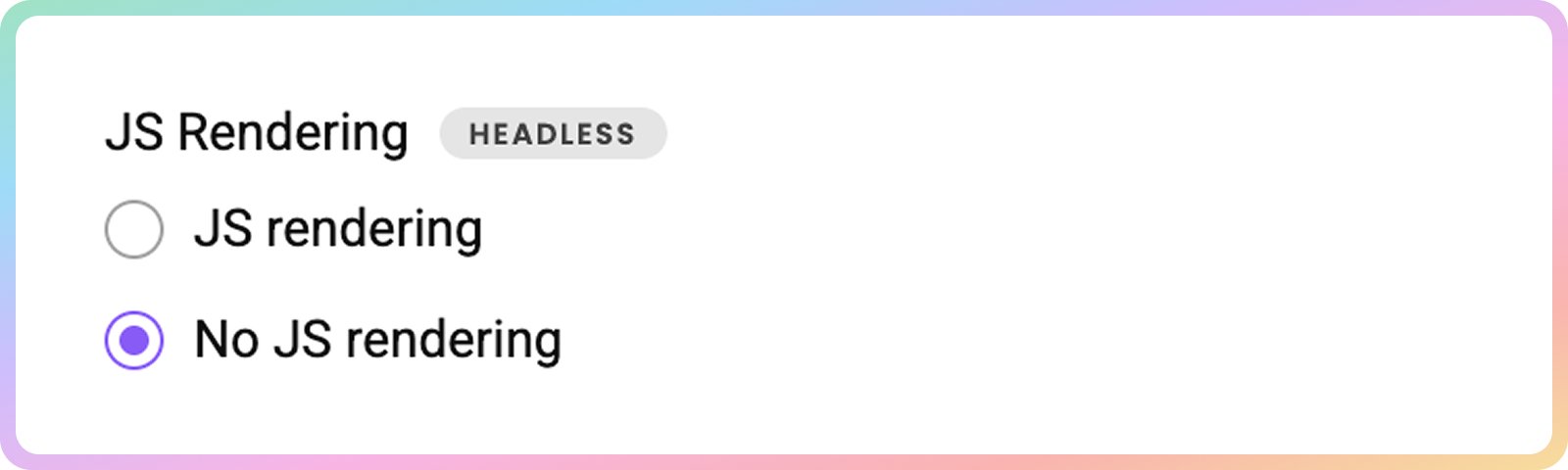

JavaScript Rendering

- Then, choose whether to enable or disable JavaScript rendering.

- With JavaScript rendering enabled, you can target dynamic pages without running a headless browser yourself.

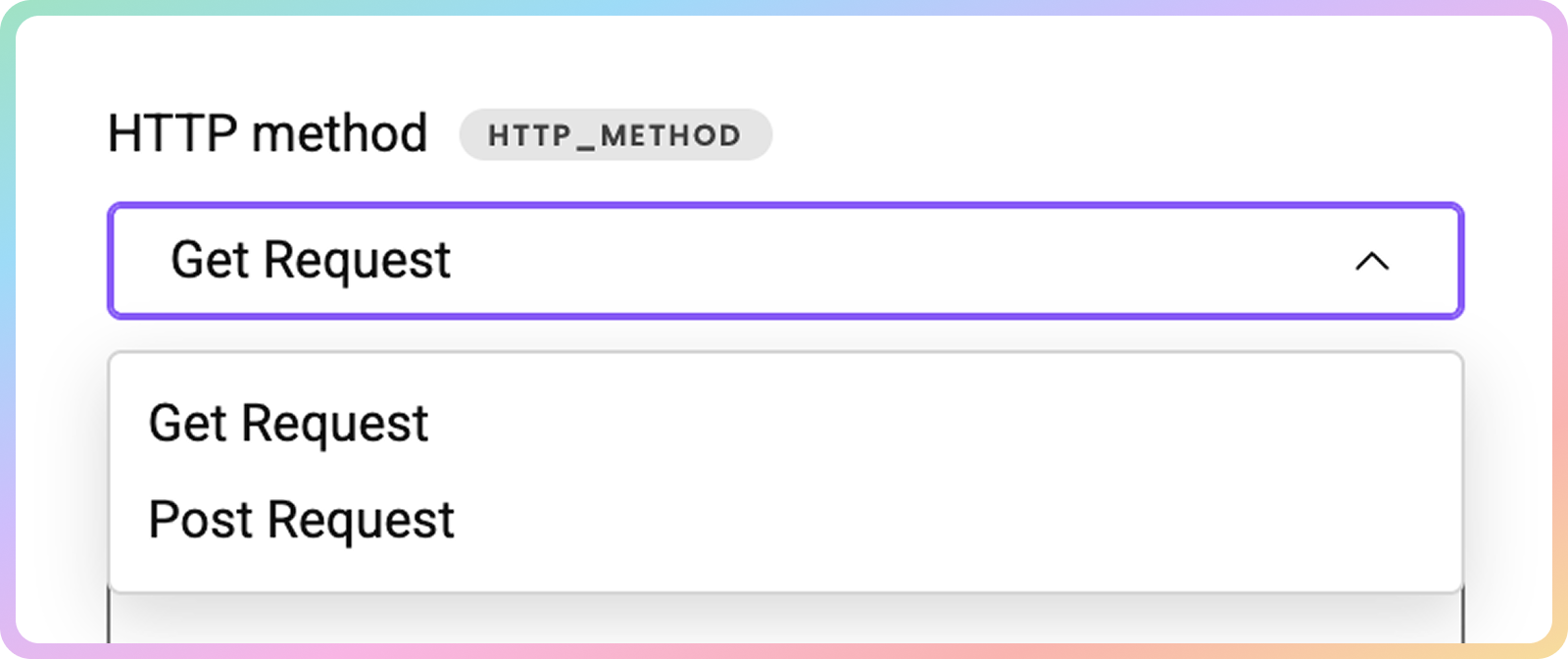

HTTP Method

- Then, you can choose the HTTP method. GET is the default; however, you can select POST if you want to pass a payload with your request.

POST functionality allows you to send data to a specific website, resulting in a modified response from the target.

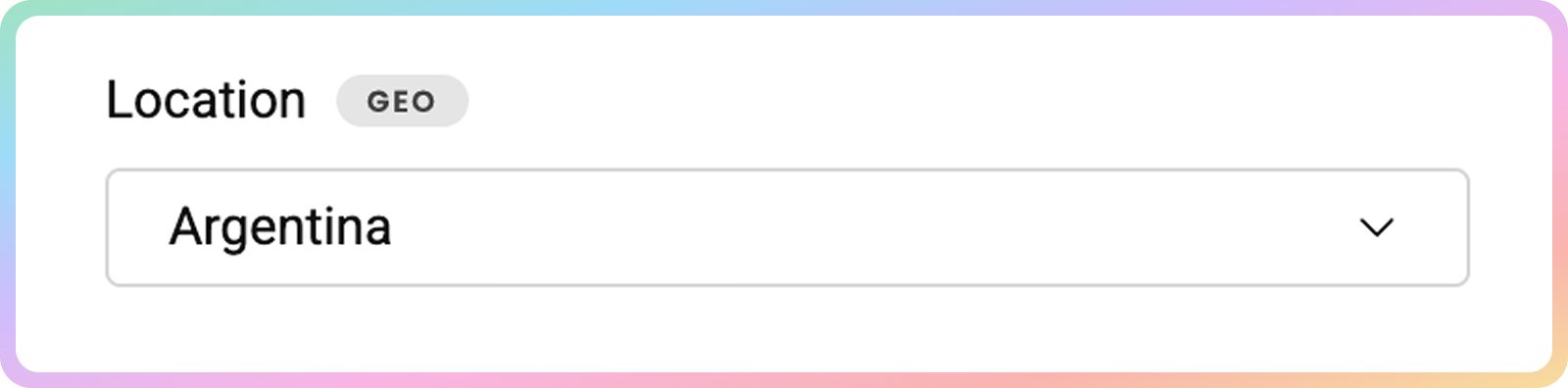

Location

- Next, choose the location from which you'd like to access the website.

- Depending on your desired location, the Web Scraping API will add proxies from the Decodo IP pool.

- Learn more about localization here.

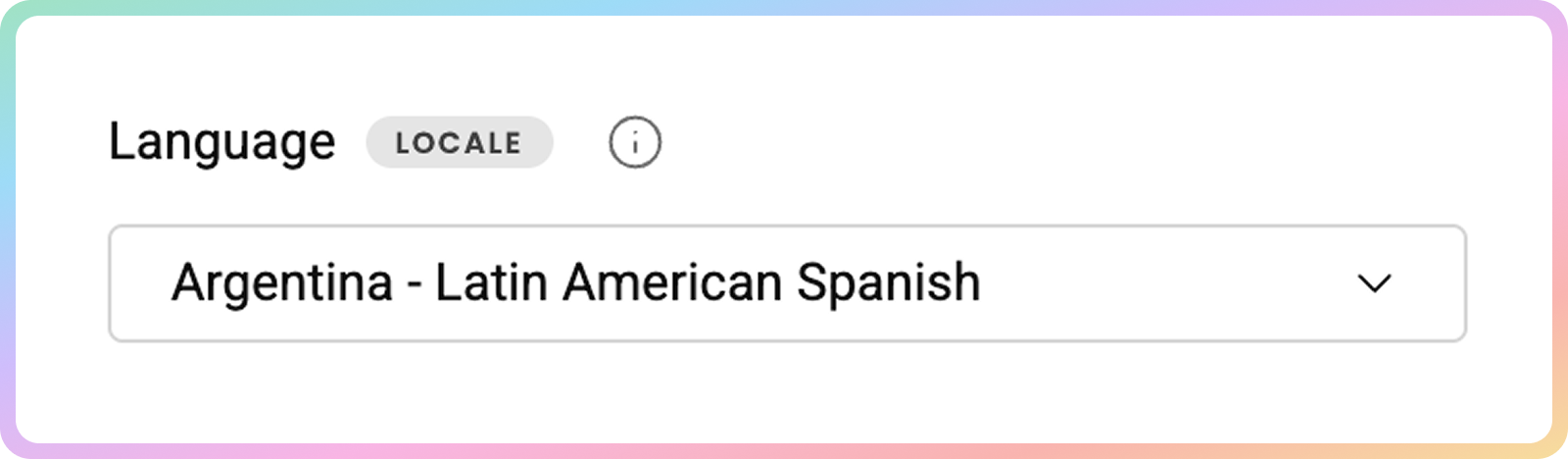

Language

- Specify the locale.

- The `locale` parameter allows you to simulate requests from a specific geographic location or language.

- This changes the web page interface language, not the language of the results.

- Learn more here.

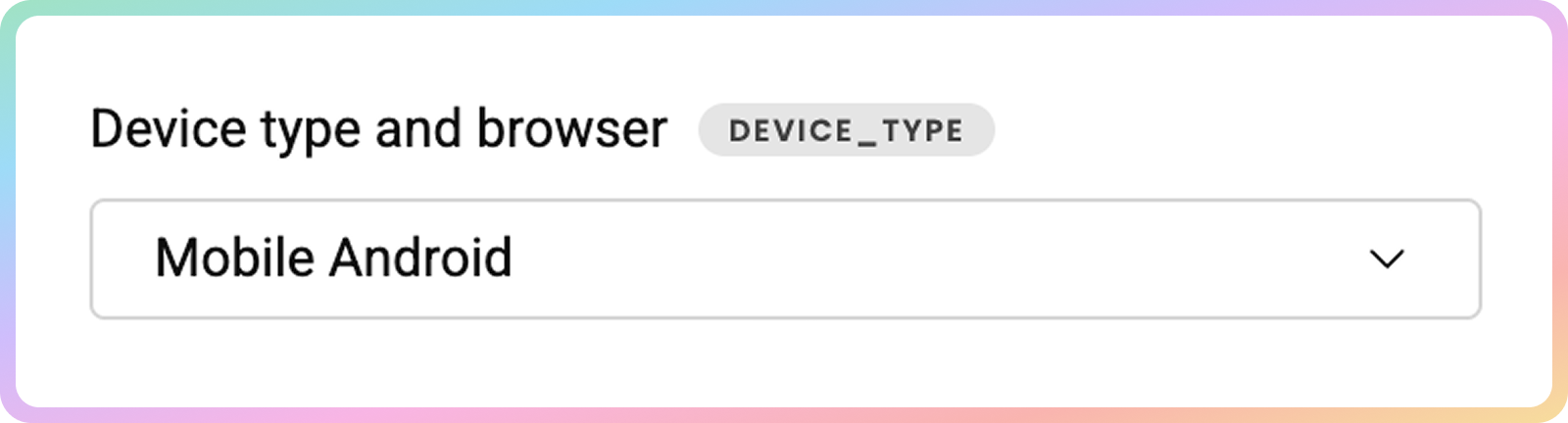

Device type and browser

- You may also specify the device type and browser.

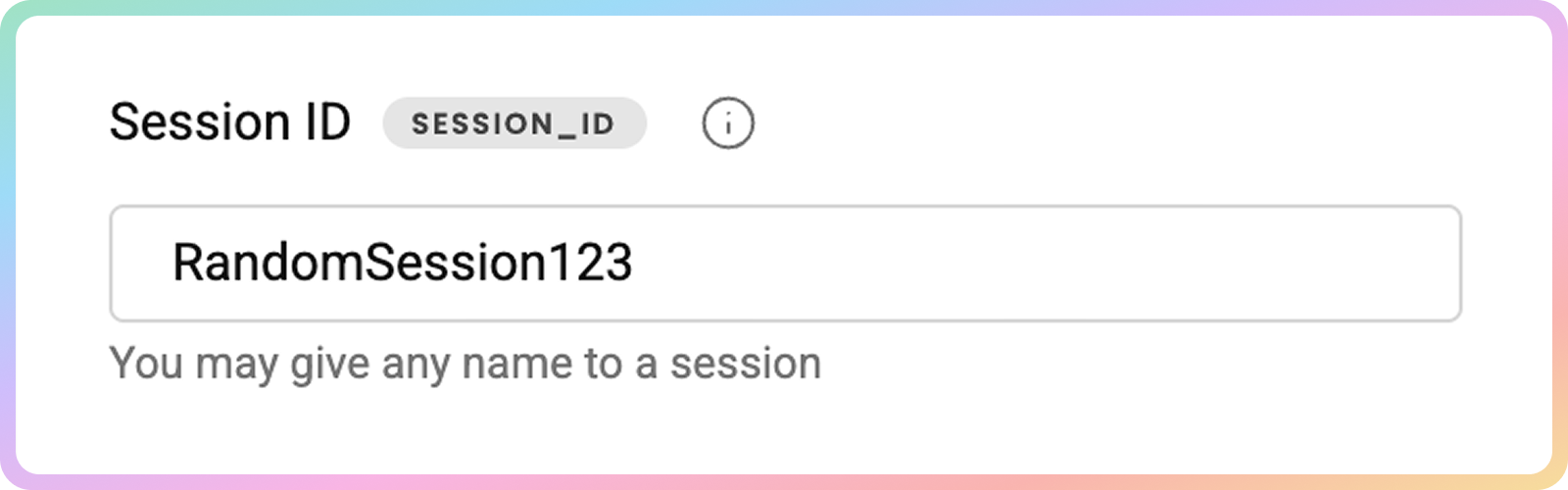

Session

- Optionally, name your session.

- The `session_id` parameter lets you use the same proxy connection for multiple requests for up to 10 minutes.

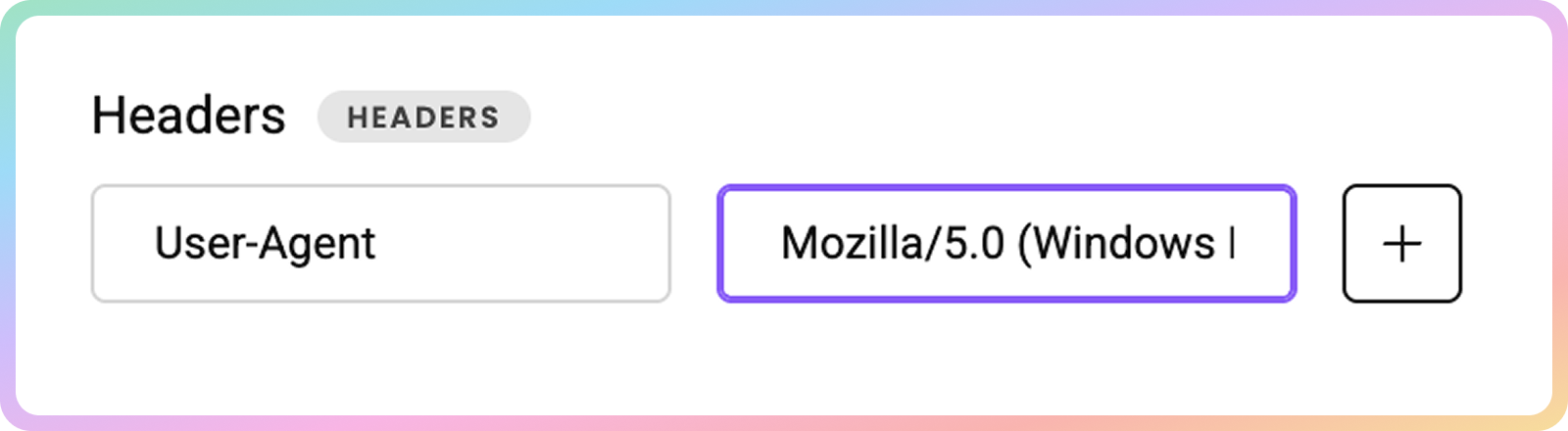
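Through the API, a session amounts to sending the same `session_id` with every request that should share a proxy connection. This sketch assumes `session_id` is passed as a top-level field next to target and url, and that any unique string works as its value; session_payloads and session_still_valid are hypothetical helpers, and the 10-minute window comes from the description above.

```python
import uuid
from datetime import datetime, timedelta
from typing import List, Optional

# Sessions keep the same proxy connection for up to 10 minutes
SESSION_WINDOW = timedelta(minutes=10)


def session_payloads(urls: List[str], session_id: Optional[str] = None) -> List[dict]:
    """Build one task payload per URL, all sharing a single session_id."""
    sid = session_id or uuid.uuid4().hex  # random name if none given (assumed acceptable)
    return [{"target": "universal", "url": url, "session_id": sid} for url in urls]


def session_still_valid(started_at: datetime, now: datetime) -> bool:
    """True while the session is still within its 10-minute window."""
    return now - started_at <= SESSION_WINDOW
```

Requests sent after the window closes should be expected to get a different proxy connection, even with the same `session_id`.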

Headers

- Specify custom headers along with their values.

- Some headers are preset by default to increase the success rate. You can enable the option below to force your own headers; however, note that the success rate may drop. Requests with forced headers are always charged, even if scraping fails.

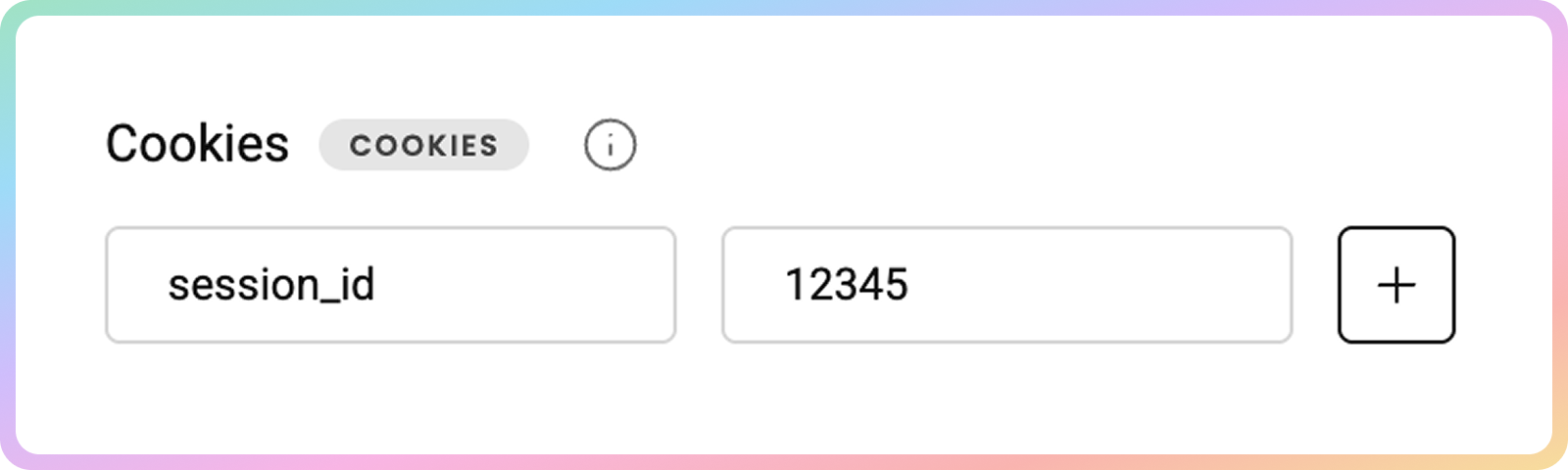

Cookies

- Specify custom cookies along with their values.

- You can also force cookies. Note that when you force headers or cookies, the request is always charged to your subscription, even if it fails.

Successful Status Codes

- You can also specify custom status codes.

- Sometimes, websites return the required content together with an HTTP status code that normally indicates an error.

- If one of your targets does that, you can indicate which status codes are acceptable and valuable to you: 501, 502, 403, etc.

Markdown

- Check the toggle to automatically parse HTML content into Markdown.

- Markdown reduces the token count compared to raw HTML.

- Learn more here.

Sending a Request

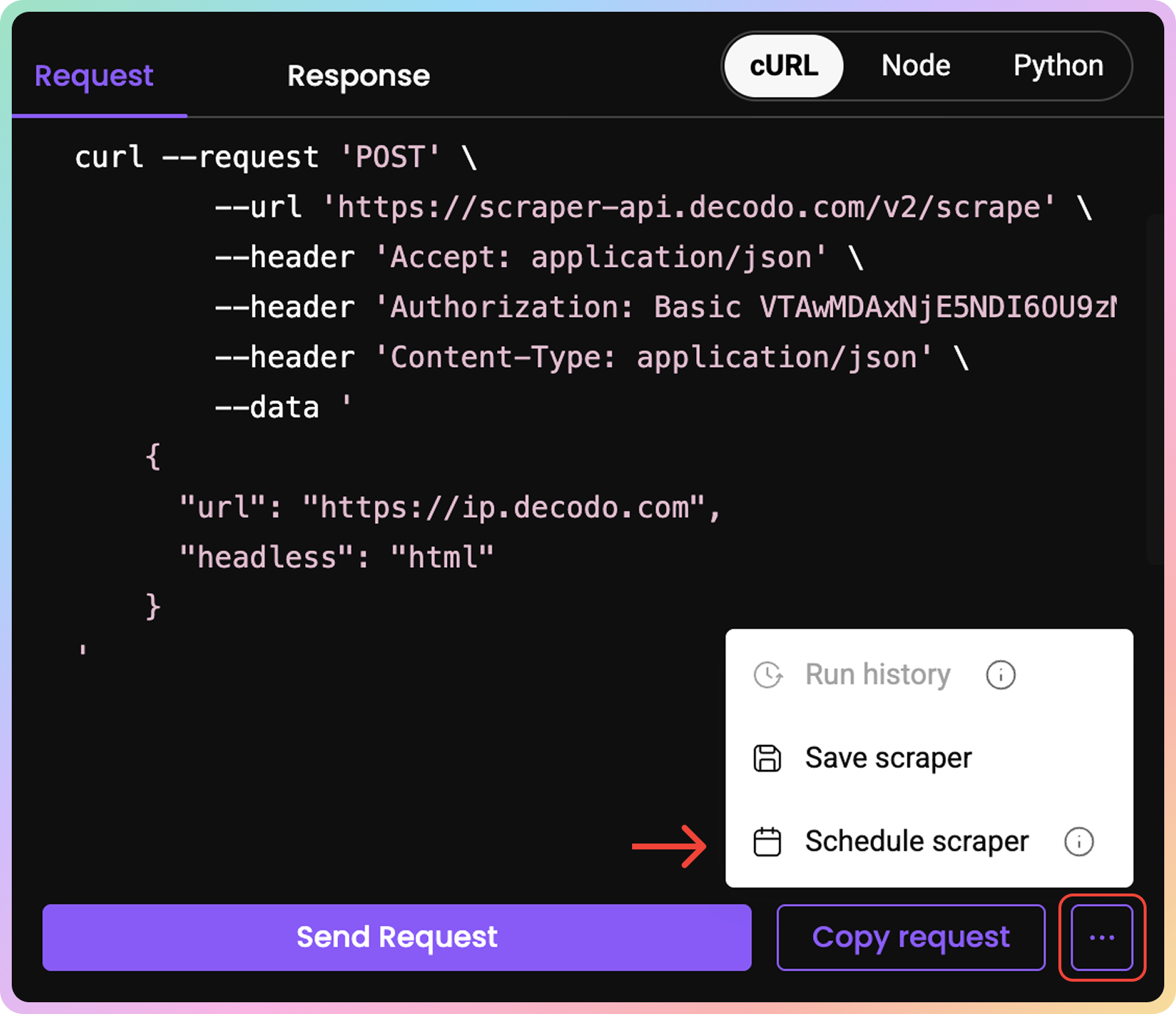

- Once you're all set, press Send Request. After a few moments, you'll see the returned response and live preview.

- If you select the Request tab, you can also copy the request as code by clicking Copy request.

- You can copy the response or download it.

- If you chose a specific template, you might see the output in multiple formats, such as live preview, JSON, or parsed tables.

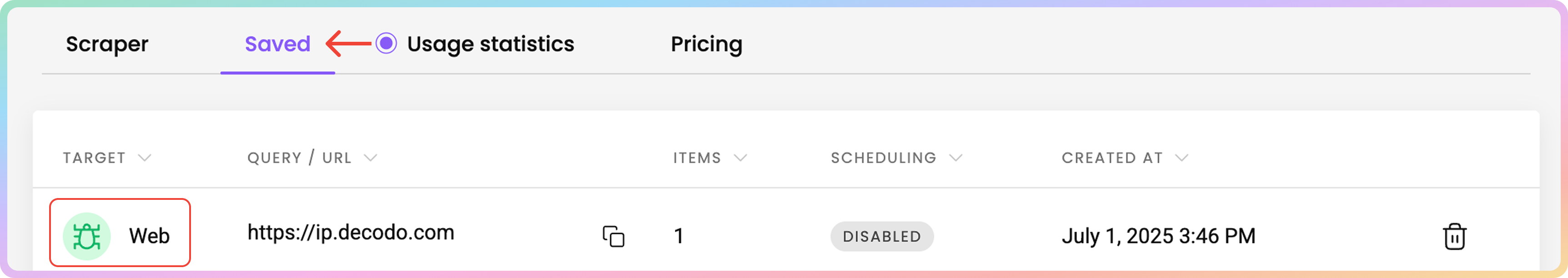

Saving Templates

- If you need to reuse the scraper, save it by clicking the three-dots button and Save scraper.

- Then, simply return to the Saved section and access your saved template by clicking on the Target icon.

Scheduling Templates

- Once the template is saved, you can schedule future scrapes by opening the saved scraper from the Saved section (via the Target icon).

- Then, click the three-dots and choose Schedule Scraper.

- Specify how often you want the scraper to run and select the data delivery method.

Schedule – hourly, daily, weekly, monthly or custom cron.

Delivery Method – email, webhook or Google Drive.

- The schedule is executed in UTC.

- When ready, click Save to enable scheduling. To turn off scheduling, click the ON toggle.

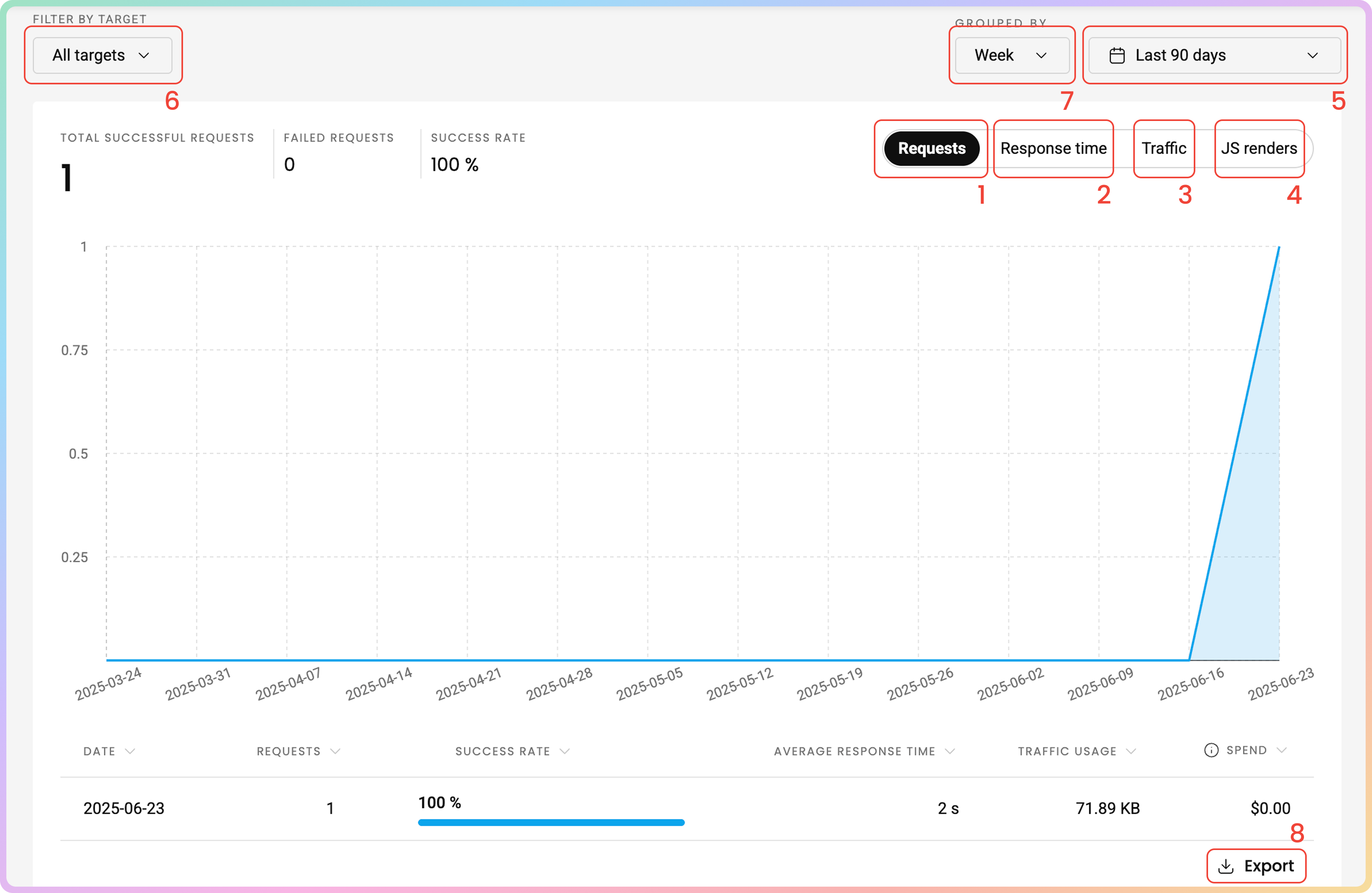
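Because scheduled scrapes run in UTC, a custom cron expression has to be written in UTC as well. This sketch assumes the custom cron option accepts standard five-field syntax (minute, hour, day of month, month, day of week) and only handles daily runs at a fixed UTC offset; daily_cron_utc is a hypothetical helper. In zones with daylight saving time the offset changes during the year, so the expression would need updating.

```python
from datetime import datetime, timedelta, timezone


def daily_cron_utc(hour: int, minute: int, utc_offset_hours: float) -> str:
    """Convert a local daily run time into a UTC cron expression ("m h * * *")."""
    local_tz = timezone(timedelta(hours=utc_offset_hours))
    # Any date works for a fixed offset; only the time of day matters for a daily job
    local = datetime(2024, 1, 1, hour, minute, tzinfo=local_tz)
    utc = local.astimezone(timezone.utc)
    return f"{utc.minute} {utc.hour} * * *"
```

For example, a daily run at 09:00 in a UTC+2 zone becomes `daily_cron_utc(9, 0, 2)`, which returns "0 7 * * *".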

Usage Statistics

To track your traffic usage, go to the Usage Statistics tab.

Graph

Here, you can see various statistics displayed in a graph:

- The number of requests you've sent (total successful requests, failed requests, and success rate).

- The average response time.

- The traffic used.

- The number of JavaScript renders.

You can also:

- Choose the time period.

- Filter by target.

- Group by hour, day, week, or month.

- Export the table data in .CSV, .TXT, or .JSON formats.

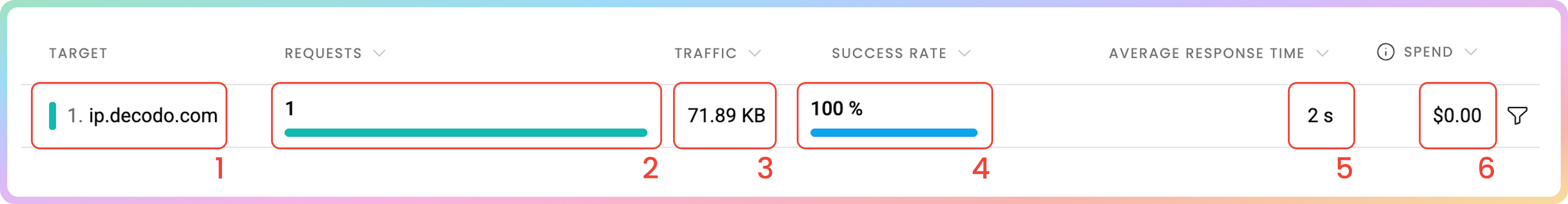

Top Targets

At the bottom of the page, the Top Targets section helps you understand usage patterns for specific websites:

- The target.

- The number of requests.

- Traffic – The volume of data consumed.

- Success rate – The percentage of successful requests.

- Average response time – The average time taken for a response.

- Spend in $ – The total cost associated with the traffic. Amounts displayed exclude VAT.
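The success rate is the share of successful requests out of all requests sent. Assuming every request is counted as either successful or failed, it can be recomputed from exported counts like this (success_rate is a hypothetical helper):

```python
def success_rate(successful: int, failed: int) -> float:
    """Percentage of successful requests out of all requests sent."""
    total = successful + failed
    if total == 0:
        return 0.0  # no traffic yet: report 0% instead of dividing by zero
    return 100.0 * successful / total
```

For instance, 95 successful and 5 failed requests give a 95.0% success rate.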

Statistics Via Requests

Use this POST endpoint to retrieve target statistics:

https://dashboard.decodo.com/subscription-api/v1/api/public/statistics/target

- Learn more about URL parameters for statistics here.

Integrations

Decodo MCP Server Guide for Web Advanced

- This repository provides a Model Context Protocol (MCP) server that connects LLMs and applications to Decodo's platform. The server facilitates integration between MCP-compatible clients and Decodo's services, streamlining access to our tools and capabilities.

- Check it out here on our GitHub.

Troubleshooting

If you are experiencing any issues, please refer to our troubleshooting section or contact our support team, which is available 24/7.

Support

Still can't find an answer? Want to say hi? We take pride in our 24/7 customer support. Alternatively, you can reach us via our support email at [email protected].
